Have you ever noticed that your blog posts are indexed in blog search within a few minutes but take much longer to appear in the main index? Why are some posts indexed faster than others? Are sitemaps really helpful for indexing? How can you get your posts indexed faster?

These questions come up all the time. The answers are explained here.

In this post we will see:

1. The Main Difference between Indexing and Crawling

2. Main Use of Sitemaps and pings

3. Which blog posts are indexed faster

4. Google Canonicalization of the Crawled Contents

1. Difference between Indexing and Crawling

When you post new content on your blog, Google may crawl the post but may not add it to the main index. In the case of blogs, Google blog search may crawl your new URLs within a few minutes, but they may not make it into the main index.

Just because Google crawls your post, it doesn't mean that the post will be indexed. Google follows a standardization process, according to its policies, in which the crawled content is first analyzed for quality and only then sent to the main index. You can read more about how this standardization is done below. So crawling and indexing in Google are different.

2. Main Use of Sitemaps and pings

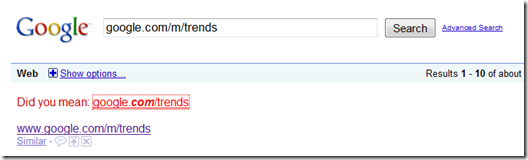

If you think that submitting your sitemap to Google or pinging Google will definitely get your posts indexed, that is not the case. Google uses the sitemaps submitted to it to crawl the content and discover new URLs, and then picks out the quality content to be indexed. Google does not guarantee that submitting your sitemap will get your content indexed. Again, the Canonicalization process decides which content is indexed.
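As a rough illustration of what a sitemap actually contains, here is a minimal sketch in Python that builds one in the sitemaps.org XML format. The post URLs, the dates and the function name `build_sitemap` are hypothetical and only there for the example. Keep in mind that a file like this only helps Google discover your URLs; it does not guarantee that they will be indexed.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(posts):
    """Return sitemap XML (as a string) for (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in posts:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical blog posts, for illustration only.
posts = [
    ("https://example.com/2010/01/first-post.html", "2010-01-15"),
    ("https://example.com/2010/02/second-post.html", "2010-02-03"),
]

sitemap_xml = build_sitemap(posts)
print(sitemap_xml)
```

Each new post simply becomes one more `url` entry, so regenerating the file whenever you publish keeps the list of URLs Google can crawl up to date.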

3. Which blog posts are indexed faster

This is really a big question. According to Google, posts from well-known (popular) blogs that have good-quality content will be indexed faster. Popular blogs with good content are crawled and indexed frequently. So if you want your posts to get indexed faster, make your blog well known and post good content.

Now your question will be: what is good content? You can find the answer below.

4. Google Canonicalization of the Crawled Contents

Google has its own set of rules and policies that it applies to crawled content to decide whether or not to index it. This is the Google Canonicalization process, which is done to produce relevant, quality results. So any blog post with good content that passes this standardization process will be indexed within a short time. As far as we have seen, good content is content that has a unique style and contains keywords that are frequently searched for in Google. But the Canonicalization process changes frequently as the policies change, so we cannot permanently define what counts as a good post as far as Google is concerned.

So you have learnt that in order to get your content indexed faster you need to:

- Popularize your blog

- Produce Good and Quality content

- Use relevant Keywords

- Have a Unique Style of your own (Do not Copy and Paste)

You can watch Google explaining the details of blog indexing below:

Disclaimer: The content above reflects solely the views of the author, not of www.techrena.net, which is only the medium of communication. www.techrena.net does not take any responsibility for any incident resulting from the information provided here.